4. Decision Mistaking

Video

Understanding Your Pet Earthling

Unlike us blorxians, earthlings have no logic chip.

This makes their decisions so . . . exciting and unpredictable.

After years of probing their brains, we now have discovered 9 extraneous variables that earthlings mix into their decision-making algorithms. With these new insights, we can better control their unpredictable little minds.

Earthlings remember bad outcomes more than good outcomes.

For example, if your pet earthling is playing with martians and one bites them, they will remember that one time and forget all the times martians did not bite them. Then they will fear all martians and aggressively chase them out of their pen.

Earthlings worry more about losing than they care about winning.

For example, if they find 20 monetary units on the sidewalk, they feel a little happy.

But if they drop 20 monetary units, they feel very unhappy.

Earthlings hate criticism more than they like compliments.

So even if you tell your pet they’re a “bad earthling” for plundering your food preservation unit, but a “good earthling” for bringing your slippers, they will still feel bad.

Earthlings prioritize the short term over the long term.

When you take your earthling to the vet to get their shots, notice they will hide from you to avoid the immediate pain of the shots, even though the eventual pain of getting rectal worms later is much worse.

Part of their skewed logic is their inspiring but unfounded optimism that they simply will be lucky enough never to get a worm infestation.

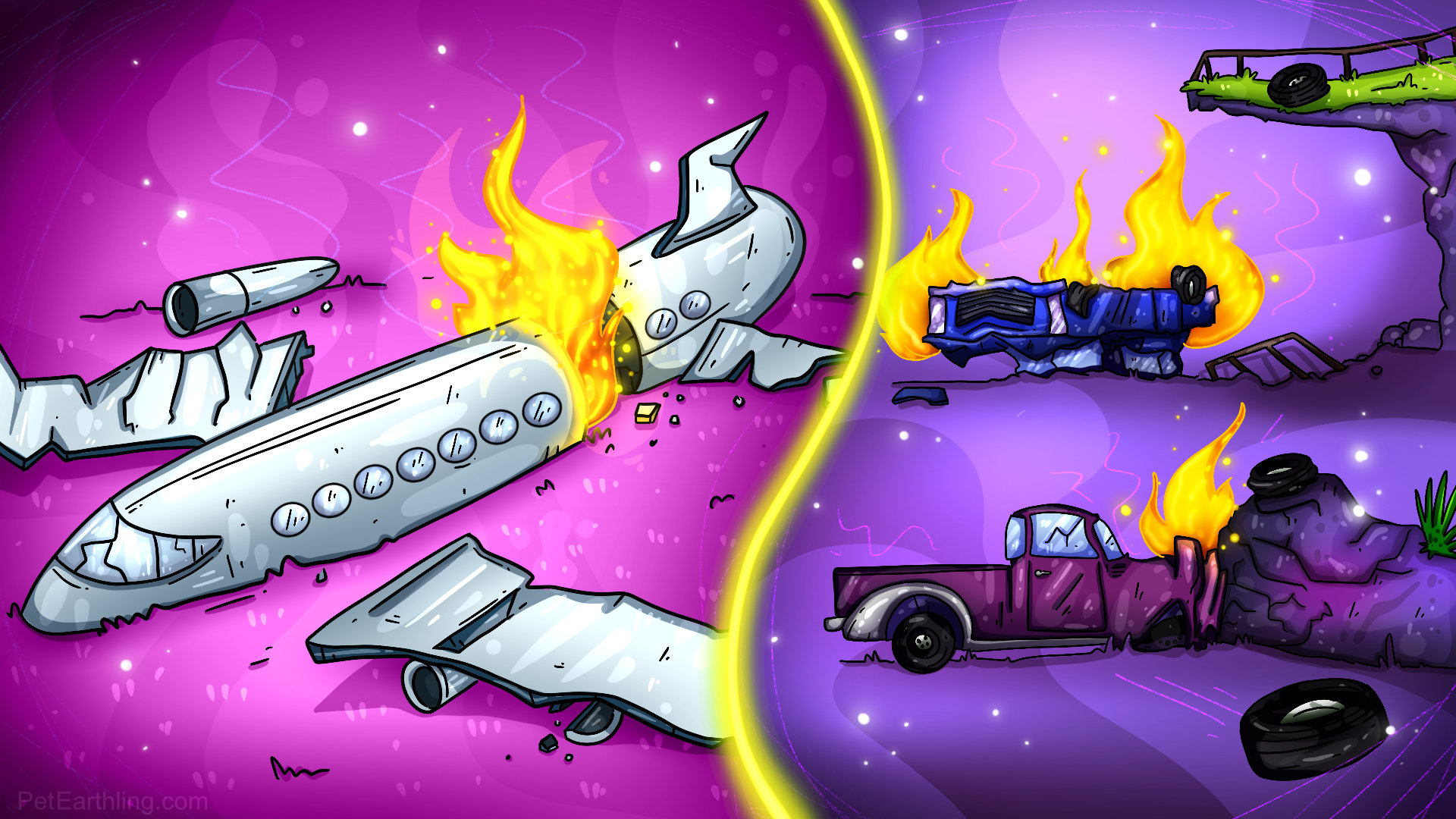

Earthlings pay more attention to rare and concentrated outcomes.

If 200 earthlings die in an airship crash, they miscalculate that as worse than 200 earthlings dying one at a time in separate road-car crashes.

Earthlings care more about bad intentions than accidents.

If one earthling is injured intentionally by another, they are much more shocked than if 10 earthlings get injured accidentally.

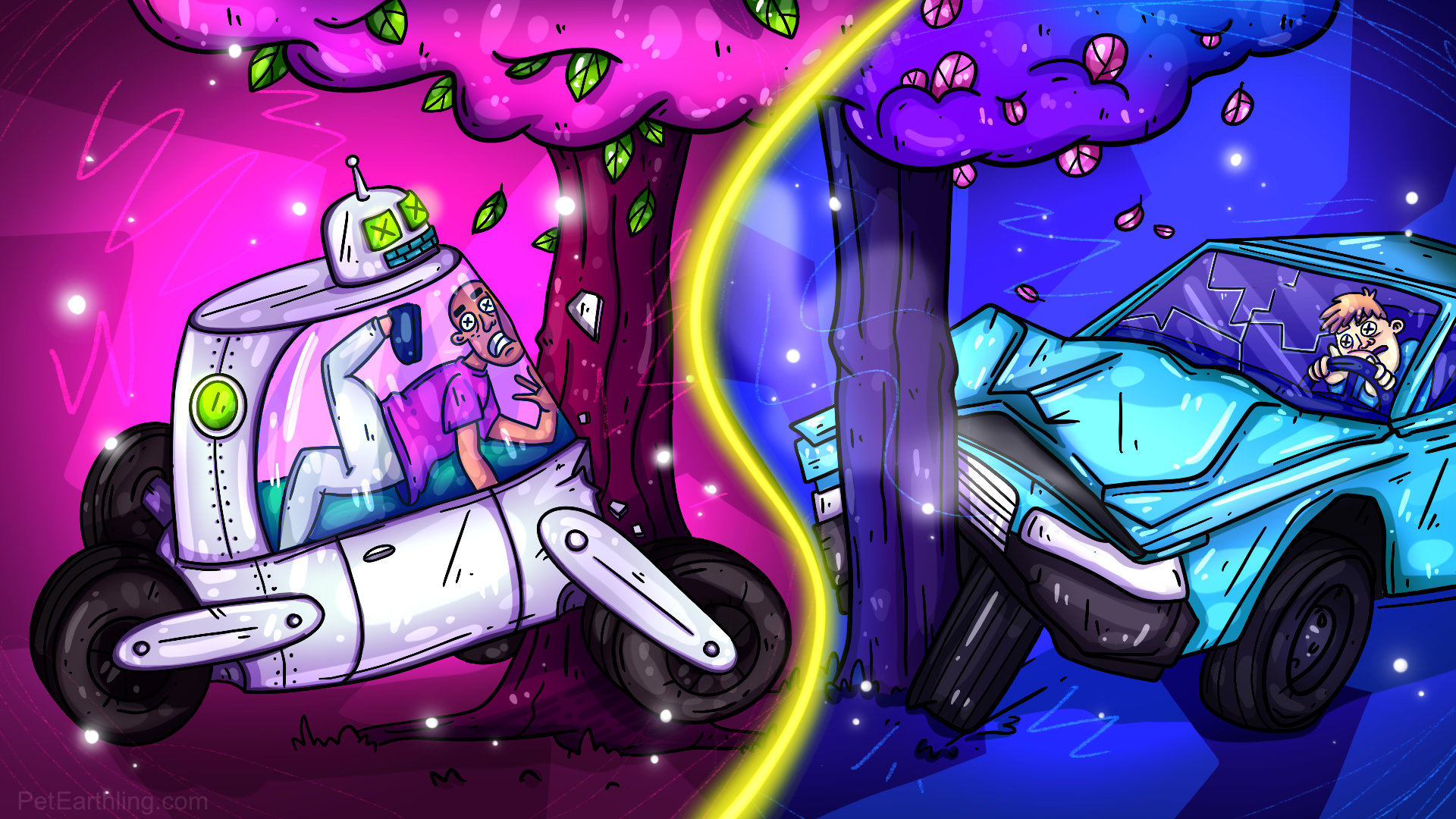

Earthlings worry more about external control than internal control.

Most earthlings would rather take their chances dying while driving their own road-car than risk a much smaller chance of dying in a robot-car.

Earthlings care more about action than inaction.

If an earthling saw a trolley about to run over and kill 5 earthlings tied to the tracks, they would rather passively let those 5 die than actively switch the trolley to another track where only 1 earthling would die.

Earthlings care more about visible results than invisible results.

For example, earthlings would gladly take a pill to cure bowel worms, but they would be reluctant to take a pill to prevent bowel worms, because the lack of bowel worms is something they cannot see.

Of course you’ve probably noticed that most of these rules contradict each other, and each earthling may prioritize them differently in different situations.

So just nod along and take delight in your earthling’s amusingly unpredictable decisions.

© 2021 Hans Ness

Imagine a Truth-o-meter that measures how clearly true or false something is:

As a reasonable person, you and the android would agree on objective Truths, like 2+2=4 and the earth orbits the sun. And you would agree on objective Falsehoods, like 2+2=5 and the moon-landing was faked.

Then there are the Fallacies, which are objectively false, but to us humans they feel true. If a bad crop follows a meteor shower, it feels like the meteor caused the bad crop, just like if autism appears after vaccination, it feels like the vaccine caused autism. But as a reasonable person you could be educated to recognize the fallacy, so you would agree with the android.

And then there is an in-between band, the Irrational Truths, where you and your android companion will have difficulty agreeing. Take for example the trolley dilemma in #8 where you must decide to let 5 people die or save them, which causes 1 other person to die. Rationally, you agree that 1 person dying is better than 5 people dying, but it still feels wrong to make that decision, so you let the 5 people die. The android cannot understand how you could be so coldhearted, and concludes you are irrational or sadistic. You partially agree.

This is that list of Irrational Truths, where we may agree with the android that it is irrational, but still do it anyway, unable to fully justify why.

“1. Earthlings remember bad outcomes more than good outcomes.”

This pessimism has a plausible origin story. Let’s say you’re a cave person, and every day you hear a rustling in the bush, then a bunny rabbit hops out. Then one day you hear a rustling and a tiger leaps out! Assuming you survive, on the next day when you hear rustling, do you think bunny rabbit or tiger? Exactly! Your cavemate who thinks bunny eventually becomes tiger lunch, while you survive to produce cavebabies who assume the worst. So this bias is a good thing . . . until it is not:

“For example, if your pet earthling is playing with martians and one bites them, your earthling will remember that one time and forget all the times martians did not bite them. Then they will fear all martians and aggressively chase them out of their pen.”

This example shows how the same instinct could inflame other problems like racism. Any one bad experience could be used to ignore all the good experiences and exacerbate a bias.

“2. Earthlings worry more about losing than they care about winning.”

This is called Loss Aversion. It is discussed mostly for investments, but more broadly it means you consider things you own to have more value than things you don’t own, even something with a precisely objective value like a $20 bill. So you resist selling your house when the market is down because it feels like a loss, even though any new house you would buy is also reduced because the market is down. The android thinks you may have a brain defect.

“3. Earthlings hate criticism more than they like compliments.”

This is so obvious, and yet so difficult to explain why, but let’s try: (a) One pragmatic reason to pay more attention to criticism is that it may tell you how to improve, while compliments are less useful for that. (b) Another possible reason is that we give compliments more generously but keep most critical thoughts to ourselves; therefore it is reasonable to assume that other people do the same, so any criticism said out loud is just the tip of the non-verbal spear. (c) Or maybe this is just an extension of the first two rules, where we put more weight on the negative. The android thinks we are miscalibrated.

“4. Earthlings prioritize the short term over the long term.”

We procrastinate work and chores because we’d rather play now than play later. We don’t save enough money because we’d rather spend it now than spend it later.

“Part of their skewed logic is their inspiring but totally unfounded optimism....”

This Optimism Bias means we downplay risks and assume luck, as if we were action heroes dodging a hail of bullets and not getting hit because . . . well, just because. (While this bias is a powerful catalyst, it’s not listed as its own irrational truth because a reasonable person can be educated to correct this bias, so it’s in the same range as Fallacies.)

“5. Earthlings pay more attention to rare and concentrated outcomes.”

In 2001, nearly three thousand people died in the 9/11 terrorist attacks. That same year over 42,000 died in car accidents in the U.S., but that didn’t get much news coverage, because it’s not rare or concentrated all in one day or event. Likewise, millions of people knowingly place a terrible bet on lottery tickets, because losing is common and gradual, while winning is rare and concentrated. The android concludes we are really bad at math.

“6. Earthlings care more about bad intentions than accidents.”

If you owned a store, and one of your cashiers stole $100 from the till, but your other cashier loses $200 every month from various mistakes, who would you fire? Assuming you must keep one, the android would keep the thief, because $200 > $100. Or: If your grandpa were killed by a hurricane, you would feel sad. If he were killed by a robber, you would feel much worse. “But why?” asks the android. Both have the same tragic result. Both could have been avoided if you knew exactly what to avoid. From our social evolution, it makes sense that intentions are important so we can tell our friends from enemies. Intention leads to social rules and laws and punishment. But in cases like this, even after the killer is caught and convicted, it still feels worse. And the android still does not understand why.

“7. Earthlings worry more about external control than internal control.”

If you accidentally set your home on fire, you would be angry at yourself. If someone else accidentally set your home on fire, you would be even more angry at them. But why? It’s more satisfying to blame someone else than to blame yourself. But still it’s an accident that anyone might have made, so the android sees no difference. We just like to be in control.

“8. Earthlings care more about action than inaction.”

Back to our bloody trolley tracks. There is actually a plausible justification for you letting 5 people die: If you switched the trolley track to kill 1 person instead, that means the responsibility is on your shoulders, and their distraught family would blame you for killing their loved one. But that’s circular reasoning: if we didn’t care more about action than inaction, there would be no guilt or blame. So the android concludes you are coldhearted and selfish.

“9. Earthlings care more about visible results than invisible ones.”

It’s easy to see how seeing is believing. A goldfish can see what is there, but I doubt it can imagine what is not there. It goes against our natural biases to prevent things that are not in front of our eyes, like future cancer or future HIV, or to observe non-events like every time a child does not get autism after getting vaccinated. The android questions the survival of our species.

Other Contenders

Really there is no hard line between Fallacies and Irrational Truths. Both are things that feel right but are rationally wrong. The only difference is that most educated people would agree to backpedal from a fallacy, but find it difficult or impossible to back away from an irrational truth. But this is just a flimsy curtain between the two, subjectively resting on squishy words like “most” and “educated” and “difficult”, so I’ll refrain from chiseling this in stone. Certainly there may be many other human quirks that meet these criteria.

For example, what about religion? Many educated people are religious; they acknowledge they cannot prove their beliefs, but they continue to hold their beliefs because they feel correct. But is that human nature or culture? If aliens abducted a baby and raised them on their own atheist planet with no human culture, would that baby grow up to believe in religion or god? I’m guessing not, but that they still would have all the 9 biases listed above.

Other ideas I considered:

Earthlings prioritize Certainty over Uncertainty. — You are less likely to touch a hot stove than to start smoking, because you’re fairly certain the stove will burn, but less certain you would actually get lung cancer. You are less likely to try something new than to stick to what you know, because you’re certain of the status quo but less certain of any new change. This is common for foods, major life decisions, political policy, etc. However, I did not list this because I think an android would understand it well: calculations of probability are quite logical.

Earthlings prioritize Theirs over Others. — You care about your money more than someone else’s money, your country more than someone else’s country, and your child more than someone else’s child. This raises the question of whether “Irrational Truths” should include all emotions. A dumb android v1.0 would not love anyone or anything more than any other. However, it is not a far leap for an android to conclude that their own survival has more value to them than another android’s survival, and therefore anything of “theirs” has more value to them, including their human owners.

Earthlings care about Fairness over their own interest. — In the ultimatum game another person is given $200 and they must offer you some. If you reject their offer, no one gets anything. An android would always accept any offer: even $1 is more than they would have received otherwise. But as a human you would consider a small offer unfair and reject it just to spite them. But even though this shows a big difference between humans and androids, I don’t think it’s an Irrational Truth because we humans don’t think fairness is irrational; it’s part of every society. And a more advanced android would conclude from game-theory algorithms that fairness is optimal. Also, it’s hard to say this is a universal trait because it varies so much by culture and individuals.
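
For the curious, here is a minimal sketch of the ultimatum game described above, written in Python. It is only an illustration under stated assumptions: the function names are made up for this example, and the 30% "fairness cutoff" for the human is a hypothetical number, not a measured one. The only thing taken from the text is the $200 pot and the idea that the android accepts anything better than nothing.

```python
POT = 200  # dollars given to the proposer, as in the example above


def android_accepts(offer: int) -> bool:
    """Pure payoff maximizer: any positive offer beats the $0 from rejecting."""
    return offer > 0


def human_accepts(offer: int, min_share_percent: int = 30) -> bool:
    """Hypothetical human rule: reject anything below a 'fair' share of the pot.

    The 30% default is an assumption for illustration only.
    """
    return offer * 100 >= min_share_percent * POT


def responder_payoff(offer: int, accepts: bool) -> int:
    """The responder gets the offer if they accept, and nothing if they reject."""
    return offer if accepts else 0


if __name__ == "__main__":
    for offer in (1, 40, 100):
        android = responder_payoff(offer, android_accepts(offer))
        human = responder_payoff(offer, human_accepts(offer))
        print(f"offer ${offer}: android walks away with ${android}, human with ${human}")
```

With these assumptions the android always ends up with at least as much money as the human, which is exactly why it cannot understand the spiteful rejection.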

Please comment to help me refine/expand this draft.